大家好,又见面了,我是你们的朋友全栈君。

sqoop是专门用来迁移数据的,它可以把数据库中的数据迁移到HDFS文件系统,当然也可以从HDFS文件系统导回到数据库。

我来说一下Sqoop的使用场景,假如你们公司有个项目运行好长时间了,积累了大量的数据,现在想升级项目并换种数据库进行存储原来的数据,那么我们就需要先把数据都存放到另一个地方,然后再用新数据库的语句把这些数据插入到新的数据库。在没有Sqoop之前,我们要做到这一点是很困难的,但是现在有了Sqoop,事情就变的简单多了,Sqoop是运行在Hadoop之上的一个工具,底层运用了MapReduce的技术,多台设备并行执行任务,速度当然大大提高,而且不用我们写这方面的代码,它提供了非常强大的命令,我们只需要知道怎样使用这些命令,再加上一些SQL语句就可以轻轻松松实现数据的迁移工作。

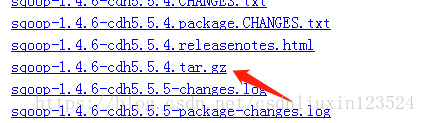

下载sqoop,地址:http://archive.cloudera.com/cdh5/cdh/5/

下面上传,解压,重命名(不详述):

xiaoye@ubuntu3:~/Downloads$ cd ..

xiaoye@ubuntu3:~$ ls

apache-activemq-5.15.3 Downloads Music Videos

classes examples.desktop Pictures zookeeper

derby.log hadoop Public zookeeper.out

Desktop hive sqoop-1.4.6-cdh5.5.4

Documents metastore_db Templates

xiaoye@ubuntu3:~$ mv sqoop-1.4.6-cdh5.5.4/ sqoop

xiaoye@ubuntu3:~$

配置环境变量:

xiaoye@ubuntu3:~$ echo $HADOOP_HOME

/home/xiaoye/hadoop

xiaoye@ubuntu3:~$ vim .bashrc

xiaoye@ubuntu3:~$ source .bashrc

xiaoye@ubuntu3:~$ sqoop

sqoop: command not found

xiaoye@ubuntu3:~$ sqoop -version

sqoop: command not found

xiaoye@ubuntu3:~$ vim .bashrc

xiaoye@ubuntu3:~$ echo $SQOOP_HOME

/home/xiaoye/sqoop

把mysql的jdbc驱动mysql-connector-java-5.1.10.jar复制到sqoop项目的lib目录下,网上随便找个版本下载吧

启动sqoop

不需要其他配置:

xiaoye@ubuntu3:~$ cd sqoop/

xiaoye@ubuntu3:~/sqoop$ cd bin

xiaoye@ubuntu3:~/sqoop/bin$ ls

configure-sqoop sqoop-export sqoop-list-tables

configure-sqoop.cmd sqoop-help sqoop-merge

sqoop sqoop-import sqoop-metastore

sqoop.cmd sqoop-import-all-tables sqoop-version

sqoop-codegen sqoop-import-mainframe start-metastore.sh

sqoop-create-hive-table sqoop-job stop-metastore.sh

sqoop-eval sqoop-list-databases

xiaoye@ubuntu3:~/sqoop/bin$ ./sqoop

Warning: /home/xiaoye/sqoop/../hbase does not exist! HBase imports will fail.

Please set $HBASE_HOME to the root of your HBase installation.

Warning: /home/xiaoye/sqoop/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /home/xiaoye/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

Try ‘sqoop help’ for usage.

使用sqoop命令查看mysql下的数据库

xiaoye@ubuntu3:~/sqoop$ ./bin/sqoop list-databases –connect jdbc:mysql://192.168.72.133:3306/ -username root -password 12qw

Warning: /home/xiaoye/sqoop/../hbase does not exist! HBase imports will fail.

Please set $HBASE_HOME to the root of your HBase installation.

Warning: /home/xiaoye/sqoop/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /home/xiaoye/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

18/04/02 21:37:39 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6-cdh5.5.4

18/04/02 21:37:39 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

18/04/02 21:37:40 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

information_schema

hive

mysql

performance_schema

sys

查看mysql数据库下所有的表:

xiaoye@ubuntu3:~/sqoop$ ./bin/sqoop list-tables -connect jdbc:mysql://192.168.72.133:3306/mysql -username root -password 12qw

Warning: /home/xiaoye/sqoop/../hbase does not exist! HBase imports will fail.

Please set $HBASE_HOME to the root of your HBase installation.

Warning: /home/xiaoye/sqoop/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /home/xiaoye/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

18/04/02 22:07:18 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6-cdh5.5.4

18/04/02 22:07:18 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

18/04/02 22:07:18 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

columns_priv

db

engine_cost

event

func

general_log

gtid_executed

help_category

help_keyword

help_relation

help_topic

innodb_index_stats

innodb_table_stats

ndb_binlog_index

plugin

proc

procs_priv

proxies_priv

server_cost

servers

slave_master_info

slave_relay_log_info

slave_worker_info

slow_log

tables_priv

time_zone

time_zone_leap_second

time_zone_name

time_zone_transition

time_zone_transition_type

user

设置时间同步,否则可能报错,在每台机子上执行下面命令:

xiaoye@ubuntu3:~$ su root

Password:

root@ubuntu3:/home/xiaoye# cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

root@ubuntu3:/home/xiaoye# ntpdate pool.ntp.org

The program ‘ntpdate’ is currently not installed. You can install it by typing:

apt install ntpdate

root@ubuntu3:/home/xiaoye# date

Tue Apr 3 13:27:15 CST 2018

root@ubuntu3:/home/xiaoye#

数据传输:

在ubuntu3执行命令:

xiaoye@ubuntu3:~$ ./sqoop/bin/sqoop import -connect jdbc:mysql://192.168.72.133:3306/mysql –username root –password 12qw –table product

出现这个错:java.io.IOException: No columns to generate for ClassWri

百度说我刚才上传到sqoop/lib下的mysql.jar报版本不对要下个5.1.32的。这里是下载地址:http://dev.mysql.com/get/Downloads/Connector-J/mysql-connector-java-5.1.32.tar.gz

下载上传后要先解压然后再把文件夹里面的jar报复制到sqoop/lib目录下。

再次执行把mysql表数据放到hdfs的命令。

这里先说一下下面命令的含义:

首先./sqoop/bin/sqoop是我们操作sqoop最常用的命令也是功能最强大的命令。接着,import是导入的意思,接着,–connect jdbc:mysql://192.168.72.133:3306意思是以jdbc的方式连接数据库,192.168.72.133是我们的数据库所在机器的IP地址(该IP可以和集群连通)这里我的是使用ubuntu3上的mysql数据库,3306是端口。接着,sqoop是我们Student表所在的mysql数据库的名称。接着,–username root –password 12qw 是指数据库的用户名和密码。接着,–table product意思是我们要导的是Student表。当然mysql首先要创建好这个product表,大家可以先随便建一个表。

xiaoye@ubuntu3:~$ ./sqoop/bin/sqoop import -connect jdbc:mysql://192.168.72.133:3306/mysql –username root –password 12qw –table product

Warning: /home/xiaoye/sqoop/../hbase does not exist! HBase imports will fail.

Please set $HBASE_HOME to the root of your HBase installation.

Warning: /home/xiaoye/sqoop/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /home/xiaoye/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

18/04/05 22:19:50 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6-cdh5.5.4

18/04/05 22:19:50 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

18/04/05 22:19:50 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

18/04/05 22:19:50 INFO tool.CodeGenTool: Beginning code generation

18/04/05 22:19:51 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `product` AS t LIMIT 1

18/04/05 22:19:52 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `product` AS t LIMIT 1

18/04/05 22:19:52 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /home/xiaoye/hadoop

Note: /tmp/sqoop-xiaoye/compile/1627120eba0d79bbbf5a5c9d91e54096/product.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

18/04/05 22:20:03 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-xiaoye/compile/1627120eba0d79bbbf5a5c9d91e54096/product.jar

18/04/05 22:20:03 WARN manager.MySQLManager: It looks like you are importing from mysql.

18/04/05 22:20:03 WARN manager.MySQLManager: This transfer can be faster! Use the –direct

18/04/05 22:20:03 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.

18/04/05 22:20:03 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)

18/04/05 22:20:03 INFO mapreduce.ImportJobBase: Beginning import of product

18/04/05 22:20:04 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform… using builtin-java classes where applicable

18/04/05 22:20:04 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar

18/04/05 22:20:07 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps

18/04/05 22:20:07 INFO client.RMProxy: Connecting to ResourceManager at ubuntu3/192.168.72.133:8032

18/04/05 22:20:08 ERROR tool.ImportTool: Encountered IOException running import job: org.apache.hadoop.mapred.FileAlreadyExistsException: Output directory hdfs://ns/user/xiaoye/product already exists

at org.apache.hadoop.mapreduce.lib.output.FileOutputFormat.checkOutputSpecs(FileOutputFormat.java:146)

at org.apache.hadoop.mapreduce.JobSubmitter.checkSpecs(JobSubmitter.java:463)

at org.apache.hadoop.mapreduce.JobSubmitter.submitJobInternal(JobSubmitter.java:343)

at org.apache.hadoop.mapreduce.Job$10.run(Job.java:1295)

at org.apache.hadoop.mapreduce.Job$10.run(Job.java:1292)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1614)

at org.apache.hadoop.mapreduce.Job.submit(Job.java:1292)

at org.apache.hadoop.mapreduce.Job.waitForCompletion(Job.java:1313)

at org.apache.sqoop.mapreduce.ImportJobBase.doSubmitJob(ImportJobBase.java:196)

at org.apache.sqoop.mapreduce.ImportJobBase.runJob(ImportJobBase.java:169)

at org.apache.sqoop.mapreduce.ImportJobBase.runImport(ImportJobBase.java:266)

at org.apache.sqoop.manager.SqlManager.importTable(SqlManager.java:673)

at org.apache.sqoop.manager.MySQLManager.importTable(MySQLManager.java:118)

at org.apache.sqoop.tool.ImportTool.importTable(ImportTool.java:507)

at org.apache.sqoop.tool.ImportTool.run(ImportTool.java:615)

at org.apache.sqoop.Sqoop.run(Sqoop.java:143)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)

at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:179)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:218)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:227)

at org.apache.sqoop.Sqoop.main(Sqoop.java:236)

还是报错,显示hdfs已经有product目录。那么就先删掉。

xiaoye@ubuntu3:~$ ./hadoop/bin/hdsf dfs -rm /user/xiaoye/product/

-bash: ./hadoop/bin/hdsf: No such file or directory

xiaoye@ubuntu3:~$ ./hadoop/bin/hdfs dfs -rm /user/xiaoye/product/

18/04/05 22:23:38 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform… using builtin-java classes where applicable

rm: `/user/xiaoye/product’: Is a directory

xiaoye@ubuntu3:~$ ./hadoop/bin/hdfs dfs -rm -rf /user/xiaoye/product/

-rm: Illegal option -rf

Usage: hadoop fs [generic options] -rm [-f] [-r|-R] [-skipTrash] <src> …

xiaoye@ubuntu3:~$ ./hadoop/bin/hdfs dfs -rm -r /user/xiaoye/product/

18/04/05 22:23:53 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform… using builtin-java classes where applicable

18/04/05 22:23:54 INFO fs.TrashPolicyDefault: Namenode trash configuration: Deletion interval = 0 minutes, Emptier interval = 0 minutes.

Deleted /user/xiaoye/product

xiaoye@ubuntu3:~$ ./hadoop/bin/hdfs dfs -ls /user/xiaoye/

18/04/05 22:24:06 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform… using builtin-java classes where applicable

再次执行:又有错误如下:

java.net.ConnectException: Call From ubuntu/192.168.72.131 to localhost:9000 failed on connection ex

说是无法连接到hdfs 地址是localhost:9000.这里错困扰了我两天时间才解决。具体的解决办法可见小编的这篇博文:

https://blog.csdn.net/csdnliuxin123524/article/details/79832504

完了再次执行:

xiaoye@ubuntu3:~$ ./sqoop/bin/sqoop import -connect jdbc:mysql://192.168.72.133:3306/mysql –username root –password 12qw –table product

Warning: /home/xiaoye/sqoop/../hbase does not exist! HBase imports will fail.

Please set $HBASE_HOME to the root of your HBase installation.

Warning: /home/xiaoye/sqoop/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /home/xiaoye/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

18/04/05 22:34:28 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6-cdh5.5.4

18/04/05 22:34:28 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

18/04/05 22:34:29 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

18/04/05 22:34:29 INFO tool.CodeGenTool: Beginning code generation

18/04/05 22:34:30 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `product` AS t LIMIT 1

18/04/05 22:34:30 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `product` AS t LIMIT 1

18/04/05 22:34:30 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /home/xiaoye/hadoop

Note: /tmp/sqoop-xiaoye/compile/fcff9cdd5ba77f9b26a2108954136c8d/product.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

18/04/05 22:34:37 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-xiaoye/compile/fcff9cdd5ba77f9b26a2108954136c8d/product.jar

18/04/05 22:34:37 WARN manager.MySQLManager: It looks like you are importing from mysql.

18/04/05 22:34:37 WARN manager.MySQLManager: This transfer can be faster! Use the –direct

18/04/05 22:34:38 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.

18/04/05 22:34:38 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)

18/04/05 22:34:38 INFO mapreduce.ImportJobBase: Beginning import of product

18/04/05 22:34:38 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform… using builtin-java classes where applicable

18/04/05 22:34:38 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar

18/04/05 22:34:41 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps

18/04/05 22:34:41 INFO client.RMProxy: Connecting to ResourceManager at ubuntu3/192.168.72.133:8032

18/04/05 22:34:50 INFO db.DBInputFormat: Using read commited transaction isolation

18/04/05 22:34:50 INFO db.DataDrivenDBInputFormat: BoundingValsQuery: SELECT MIN(`PRODUCT_ID`), MAX(`PRODUCT_ID`) FROM `product`

18/04/05 22:34:51 INFO db.IntegerSplitter: Split size: 0; Num splits: 4 from: 1 to: 1

18/04/05 22:34:51 INFO mapreduce.JobSubmitter: number of splits:1

18/04/05 22:34:52 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1522988016247_0002

18/04/05 22:34:53 INFO impl.YarnClientImpl: Submitted application application_1522988016247_0002

18/04/05 22:34:53 INFO mapreduce.Job: The url to track the job: http://ubuntu3:8088/proxy/application_1522988016247_0002/

18/04/05 22:34:53 INFO mapreduce.Job: Running job: job_1522988016247_0002

18/04/05 22:35:37 INFO mapreduce.Job: Job job_1522988016247_0002 running in uber mode : false

18/04/05 22:35:37 INFO mapreduce.Job: map 0% reduce 0%

18/04/05 22:36:14 INFO mapreduce.Job: map 100% reduce 0%

18/04/05 22:36:16 INFO mapreduce.Job: Job job_1522988016247_0002 completed successfully

18/04/05 22:36:17 INFO mapreduce.Job: Counters: 30

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=127168

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=115

HDFS: Number of bytes written=36

HDFS: Number of read operations=4

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Other local map tasks=1

Total time spent by all maps in occupied slots (ms)=32974

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=32974

Total vcore-seconds taken by all map tasks=32974

Total megabyte-seconds taken by all map tasks=33765376

Map-Reduce Framework

Map input records=1

Map output records=1

Input split bytes=115

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=132

CPU time spent (ms)=1200

Physical memory (bytes) snapshot=93220864

Virtual memory (bytes) snapshot=1938845696

Total committed heap usage (bytes)=16318464

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=36

18/04/05 22:36:17 INFO mapreduce.ImportJobBase: Transferred 36 bytes in 95.9748 seconds (0.3751 bytes/sec)

18/04/05 22:36:17 INFO mapreduce.ImportJobBase: Retrieved 1 records.

xiaoye@ubuntu3:~$

终于好了。不容易。

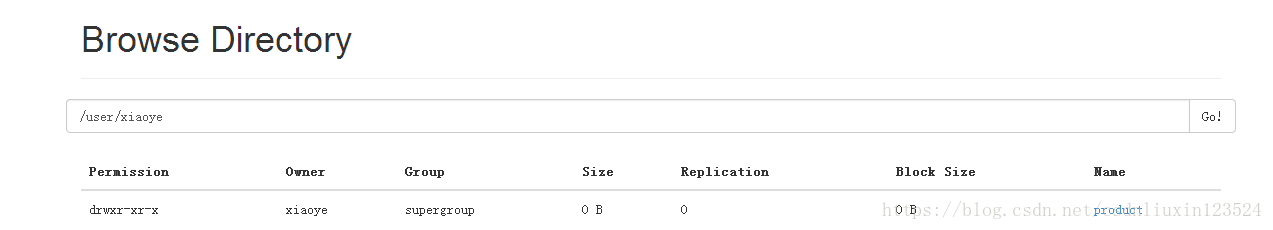

查看hdfs也有响应的目录。

分析:

命令的执行信息如下图所示,看到红色圈住的信息时说明执行成功了,这里大家发现了没有,执行过程中只有map,reduce的进度始终是0%,说明导入功能根本就没用到reduce的功能,这个其实也好理解,我们是要把数据库中的数据导入到HDFS系统,只需要多台设备同时到数据库中去读取一条一条数据然后直接上传到HDFS,根本就不需要进行合并操作。如果是要计算很多数的和的话,就要用到reduce了,显然我们的导入功能用不到reduce。

点击product,进入到product目录我们看到:

有一个-m文件。说明执行了一个map任务。

“m”代表的意思是mapper生成的文件,”r”代表的意思是reducer生成的文件。我们依次点开这四个文件,看看生成的结果是否正确。点开part-m-00000文件downLoad下载显示不容许。那我就使用命令查看:

确实有一条数据,下面是我mysql中product表的数据:

下面我们再mysql中多造几条数据,看看会有什么不同。

先删掉hdfs的product目录:

xiaoye@ubuntu3:~$ ./hadoop/bin/hdfs dfs -rm -r /user/xiaoye/product/

18/04/05 23:52:42 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform… using builtin-java classes where applicable

18/04/05 23:52:43 INFO fs.TrashPolicyDefault: Namenode trash configuration: Deletion interval = 0 minutes, Emptier interval = 0 minutes.

Deleted /user/xiaoye/product

在mysql中造几条数据:

执行导入命令:

xiaoye@ubuntu3:~$ ./hadoop/bin/hdfs dfs -cat /user/xiaoye/product/part-m-00000

18/04/05 23:46:41 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform… using builtin-java classes where applicable

1,23,32.00,23,2018-04-03 16:05:56.0

xiaoye@ubuntu3:~$ ./hadoop/bin/hdfs dfs -rm -r /user/xiaoye/product/

18/04/05 23:52:42 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform… using builtin-java classes where applicable

18/04/05 23:52:43 INFO fs.TrashPolicyDefault: Namenode trash configuration: Deletion interval = 0 minutes, Emptier interval = 0 minutes.

Deleted /user/xiaoye/product

xiaoye@ubuntu3:~$ ^C

xiaoye@ubuntu3:~$ ./sqoop/bin/sqoop import -connect jdbc:mysql://192.168.72.133:3306/mysql –username root –password 12qw –table product

Warning: /home/xiaoye/sqoop/../hbase does not exist! HBase imports will fail.

Please set $HBASE_HOME to the root of your HBase installation.

Warning: /home/xiaoye/sqoop/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /home/xiaoye/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

18/04/05 23:53:58 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6-cdh5.5.4

18/04/05 23:53:58 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

18/04/05 23:53:58 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

18/04/05 23:53:58 INFO tool.CodeGenTool: Beginning code generation

18/04/05 23:53:59 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `product` AS t LIMIT 1

18/04/05 23:53:59 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `product` AS t LIMIT 1

18/04/05 23:53:59 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /home/xiaoye/hadoop

Note: /tmp/sqoop-xiaoye/compile/6bc1aeebcd8a0f73350aa96099c3ce1b/product.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

18/04/05 23:54:09 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-xiaoye/compile/6bc1aeebcd8a0f73350aa96099c3ce1b/product.jar

18/04/05 23:54:09 WARN manager.MySQLManager: It looks like you are importing from mysql.

18/04/05 23:54:09 WARN manager.MySQLManager: This transfer can be faster! Use the –direct

18/04/05 23:54:09 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.

18/04/05 23:54:09 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)

18/04/05 23:54:09 INFO mapreduce.ImportJobBase: Beginning import of product

18/04/05 23:54:10 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform… using builtin-java classes where applicable

18/04/05 23:54:10 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar

18/04/05 23:54:12 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps

18/04/05 23:54:12 INFO client.RMProxy: Connecting to ResourceManager at ubuntu3/192.168.72.133:8032

18/04/05 23:54:21 INFO db.DBInputFormat: Using read commited transaction isolation

18/04/05 23:54:21 INFO db.DataDrivenDBInputFormat: BoundingValsQuery: SELECT MIN(`PRODUCT_ID`), MAX(`PRODUCT_ID`) FROM `product`

18/04/05 23:54:21 INFO db.IntegerSplitter: Split size: 1; Num splits: 4 from: 1 to: 6

18/04/05 23:54:22 INFO mapreduce.JobSubmitter: number of splits:4

18/04/05 23:54:23 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1522988016247_0003

18/04/05 23:54:24 INFO impl.YarnClientImpl: Submitted application application_1522988016247_0003

18/04/05 23:54:24 INFO mapreduce.Job: The url to track the job: http://ubuntu3:8088/proxy/application_1522988016247_0003/

18/04/05 23:54:24 INFO mapreduce.Job: Running job: job_1522988016247_0003

18/04/05 23:55:11 INFO mapreduce.Job: Job job_1522988016247_0003 running in uber mode : false

18/04/05 23:55:11 INFO mapreduce.Job: map 0% reduce 0%

18/04/05 23:57:48 INFO mapreduce.Job: map 25% reduce 0%

18/04/05 23:58:09 INFO mapreduce.Job: map 50% reduce 0%

18/04/05 23:58:39 INFO mapreduce.Job: map 100% reduce 0%

18/04/05 23:58:49 INFO mapreduce.Job: Job job_1522988016247_0003 completed successfully

分析:执行过程中可以看到:

这里splits是分成4份,每份分配一个map任务。

最终:

同样用cat命令查看每个part文件分别有不同的数据。

4:下面,我们使用where条件来筛选数据并导入符合条件的数据,增加的参数是–where ‘ID>=3 and ID<=5’,顾名思义,就是要把ID从3到8的数据导入到服务器。

这次不删除hdfs的product文件了,我们在执行命令时换一个目录存储。这里使用-target-dir指定在hdfs中存放的目录路径。–fields-terminalted-by设置在hdfs文件中每个字段数据的分割间距方式-m 2是指指定执行2个map任务。其他的就没什么说的了

命令如下:

xiaoye@ubuntu3:~$ ./sqoop/bin/sqoop import –connect jdbc:mysql://192.168.72.133:3306/mysql –username root –password 12qw –table product –target-dir /user/xiaoye/product2 -m 2 –fields-terminated-by ‘\t’ -columns ‘product_id,product_name’ –where ‘PRODUCT_ID>=3 and PRODUCT_ID<=5’

Warning: /home/xiaoye/sqoop/../hbase does not exist! HBase imports will fail.

Please set $HBASE_HOME to the root of your HBase installation.

Warning: /home/xiaoye/sqoop/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /home/xiaoye/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

18/04/06 00:14:11 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6-cdh5.5.4

18/04/06 00:14:11 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

18/04/06 00:14:11 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

18/04/06 00:14:11 INFO tool.CodeGenTool: Beginning code generation

18/04/06 00:14:12 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `product` AS t LIMIT 1

18/04/06 00:14:12 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `product` AS t LIMIT 1

18/04/06 00:14:12 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /home/xiaoye/hadoop

Note: /tmp/sqoop-xiaoye/compile/35a66eba695dd15188c33f39f77e3bce/product.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

18/04/06 00:14:19 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-xiaoye/compile/35a66eba695dd15188c33f39f77e3bce/product.jar

18/04/06 00:14:19 WARN manager.MySQLManager: It looks like you are importing from mysql.

18/04/06 00:14:19 WARN manager.MySQLManager: This transfer can be faster! Use the –direct

18/04/06 00:14:19 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.

18/04/06 00:14:19 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)

18/04/06 00:14:20 INFO mapreduce.ImportJobBase: Beginning import of product

18/04/06 00:14:20 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform… using builtin-java classes where applicable

18/04/06 00:14:20 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar

18/04/06 00:14:21 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps

18/04/06 00:14:21 INFO client.RMProxy: Connecting to ResourceManager at ubuntu3/192.168.72.133:8032

18/04/06 00:14:28 INFO db.DBInputFormat: Using read commited transaction isolation

18/04/06 00:14:28 INFO db.DataDrivenDBInputFormat: BoundingValsQuery: SELECT MIN(`PRODUCT_ID`), MAX(`PRODUCT_ID`) FROM `product` WHERE ( PRODUCT_ID>=3 and PRODUCT_ID<=5 )

18/04/06 00:14:28 INFO db.IntegerSplitter: Split size: 1; Num splits: 2 from: 3 to: 5

18/04/06 00:14:28 INFO mapreduce.JobSubmitter: number of splits:3

18/04/06 00:14:29 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1522988016247_0004

18/04/06 00:14:31 INFO impl.YarnClientImpl: Submitted application application_1522988016247_0004

18/04/06 00:14:32 INFO mapreduce.Job: The url to track the job: http://ubuntu3:8088/proxy/application_1522988016247_0004/

18/04/06 00:14:32 INFO mapreduce.Job: Running job: job_1522988016247_0004

18/04/06 00:15:01 INFO mapreduce.Job: Job job_1522988016247_0004 running in uber mode : false

18/04/06 00:15:01 INFO mapreduce.Job: map 0% reduce 0%

18/04/06 00:16:29 INFO mapreduce.Job: map 33% reduce 0%

18/04/06 00:16:32 INFO mapreduce.Job: map 67% reduce 0%

18/04/06 00:16:38 INFO mapreduce.Job: map 100% reduce 0%

18/04/06 00:16:40 INFO mapreduce.Job: Job job_1522988016247_0004 completed successfully

18/04/06 00:16:42 INFO mapreduce.Job: Counters: 30

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=383343

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=343

HDFS: Number of bytes written=19

HDFS: Number of read operations=12

HDFS: Number of large read operations=0

HDFS: Number of write operations=6

Job Counters

Launched map tasks=3

Other local map tasks=3

Total time spent by all maps in occupied slots (ms)=265969

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=265969

Total vcore-seconds taken by all map tasks=265969

Total megabyte-seconds taken by all map tasks=272352256

Map-Reduce Framework

Map input records=3

Map output records=3

Input split bytes=343

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=524

CPU time spent (ms)=4200

Physical memory (bytes) snapshot=287932416

Virtual memory (bytes) snapshot=5816549376

Total committed heap usage (bytes)=48955392

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=19

18/04/06 00:16:42 INFO mapreduce.ImportJobBase: Transferred 19 bytes in 140.7508 seconds (0.135 bytes/sec)

18/04/06 00:16:42 INFO mapreduce.ImportJobBase: Retrieved 3 records.

目前看是成功的,我们看界面:

成功是成功了。但是有三个map文件,与我们设定的2个map文件不一致。这里我试着执行了几次也是同样的结果,不理解。先放着吧。

4.1我们使用query语句来筛选我们的数据,这意味着我们可以导入多张表的数据,我们还是来个简单的,命令如下。我们发现使用query语句的话,就不用指定table了,由于数量很少,现在我们指定mapper的数量为1

命令:

xiaoye@ubuntu3:~$ ./sqoop/bin/sqoop import -connect jdbc:mysql://192.168.72.133:3306/mysql –username root –password 12qw –query ‘select * from product where product_id>2’ –target-dir /user/xiaoye/product8 -m 1 –fields-terminated-by ‘\t’

Warning: /home/xiaoye/sqoop/../hbase does not exist! HBase imports will fail.

Please set $HBASE_HOME to the root of your HBase installation.

Warning: /home/xiaoye/sqoop/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /home/xiaoye/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

18/04/06 00:56:55 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6-cdh5.5.4

18/04/06 00:56:55 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

18/04/06 00:56:55 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

18/04/06 00:56:55 INFO tool.CodeGenTool: Beginning code generation

18/04/06 00:56:55 ERROR tool.ImportTool: Encountered IOException running import job: java.io.IOException: Query [select * from product where product_id>2] must contain ‘$CONDITIONS’ in WHERE clause.

at org.apache.sqoop.manager.ConnManager.getColumnTypes(ConnManager.java:332)

at org.apache.sqoop.orm.ClassWriter.getColumnTypes(ClassWriter.java:1834)

at org.apache.sqoop.orm.ClassWriter.generate(ClassWriter.java:1646)

at org.apache.sqoop.tool.CodeGenTool.generateORM(CodeGenTool.java:107)

at org.apache.sqoop.tool.ImportTool.importTable(ImportTool.java:488)

at org.apache.sqoop.tool.ImportTool.run(ImportTool.java:615)

at org.apache.sqoop.Sqoop.run(Sqoop.java:143)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)

at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:179)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:218)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:227)

at org.apache.sqoop.Sqoop.main(Sqoop.java:236)

出错了:must contain ‘$CONDITIONS’ in WHERE clause.的意思是在我们的query的where条件当中必须有$CONDITIONS’这个条件,这个条件就相当于一个占位符,动态接收传过来的参数,从而查询出符合条件的结果。

修改后:

xiaoye@ubuntu3:~$ ./sqoop/bin/sqoop import -connect jdbc:mysql://192.168.72.133:3306/mysql –username root –password 12qw –query ‘select * from product where product_id>2 and $CONDITIONS’ –target-dir /user/xiaoye/product8 -m 1 –fields-terminated-by ‘\t’

Warning: /home/xiaoye/sqoop/../hbase does not exist! HBase imports will fail.

Please set $HBASE_HOME to the root of your HBase installation.

Warning: /home/xiaoye/sqoop/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /home/xiaoye/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

18/04/06 00:58:46 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6-cdh5.5.4

18/04/06 00:58:46 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

18/04/06 00:58:46 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

18/04/06 00:58:46 INFO tool.CodeGenTool: Beginning code generation

18/04/06 00:58:47 INFO manager.SqlManager: Executing SQL statement: select * from product where product_id>2 and (1 = 0)

18/04/06 00:58:47 INFO manager.SqlManager: Executing SQL statement: select * from product where product_id>2 and (1 = 0)

18/04/06 00:58:47 INFO manager.SqlManager: Executing SQL statement: select * from product where product_id>2 and (1 = 0)

18/04/06 00:58:47 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /home/xiaoye/hadoop

Note: /tmp/sqoop-xiaoye/compile/e09ff4efa56f4d5d976da9a1f26f658d/QueryResult.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

18/04/06 00:58:50 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-xiaoye/compile/e09ff4efa56f4d5d976da9a1f26f658d/QueryResult.jar

18/04/06 00:58:50 INFO mapreduce.ImportJobBase: Beginning query import.

18/04/06 00:58:50 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform… using builtin-java classes where applicable

18/04/06 00:58:50 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar

18/04/06 00:58:51 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps

18/04/06 00:58:52 INFO client.RMProxy: Connecting to ResourceManager at ubuntu3/192.168.72.133:8032

18/04/06 00:58:59 INFO db.DBInputFormat: Using read commited transaction isolation

18/04/06 00:58:59 INFO mapreduce.JobSubmitter: number of splits:1

18/04/06 00:59:00 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1522988016247_0010

18/04/06 00:59:00 INFO impl.YarnClientImpl: Submitted application application_1522988016247_0010

18/04/06 00:59:00 INFO mapreduce.Job: The url to track the job: http://ubuntu3:8088/proxy/application_1522988016247_0010/

18/04/06 00:59:00 INFO mapreduce.Job: Running job: job_1522988016247_0010

18/04/06 00:59:25 INFO mapreduce.Job: Job job_1522988016247_0010 running in uber mode : false

18/04/06 00:59:25 INFO mapreduce.Job: map 0% reduce 0%

18/04/06 00:59:53 INFO mapreduce.Job: map 100% reduce 0%

18/04/06 00:59:54 INFO mapreduce.Job: Job job_1522988016247_0010 completed successfully

18/04/06 00:59:54 INFO mapreduce.Job: Counters: 30

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=127006

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=87

HDFS: Number of bytes written=145

HDFS: Number of read operations=4

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Other local map tasks=1

Total time spent by all maps in occupied slots (ms)=25450

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=25450

Total vcore-seconds taken by all map tasks=25450

Total megabyte-seconds taken by all map tasks=26060800

Map-Reduce Framework

Map input records=4

Map output records=4

Input split bytes=87

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=95

CPU time spent (ms)=1010

Physical memory (bytes) snapshot=105885696

Virtual memory (bytes) snapshot=1939763200

Total committed heap usage (bytes)=16318464

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=145

18/04/06 00:59:54 INFO mapreduce.ImportJobBase: Transferred 145 bytes in 62.5211 seconds (2.3192 bytes/sec)

18/04/06 00:59:54 INFO mapreduce.ImportJobBase: Retrieved 4 records.

这里是oK的,同时,也只有一个map文件。这时是好用的。

打开文件看看,确实是id大于2的数据:

xiaoye@ubuntu3:~$ ./hadoop/bin/hdfs dfs -cat /user/xiaoye/product8/part-m-00000

18/04/06 01:03:00 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform… using builtin-java classes where applicable

3 24 32.00 23 2018-04-03 16:05:56.0

4 1www 23.00 32 2018-04-02 14:52:12.0

5 1www 22.00 33 2018-04-03 14:52:31.0

6 3 3.00 4 2018-04-04 14:52:57.0

这里我们接着吧-m 1 改成-m 2就会报错:

xiaoye@ubuntu3:~$ ./sqoop/bin/sqoop import -connect jdbc:mysql://192.168.72.133:3306/mysql –username root –password 12qw –query ‘select * from product where product_id>2 and $CONDITIONS’ –target-dir /user/xiaoye/product8 -m 2 –fields-terminated-by ‘\t’

Warning: /home/xiaoye/sqoop/../hbase does not exist! HBase imports will fail.

Please set $HBASE_HOME to the root of your HBase installation.

Warning: /home/xiaoye/sqoop/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /home/xiaoye/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

18/04/06 01:05:04 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6-cdh5.5.4

18/04/06 01:05:04 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

When importing query results in parallel, you must specify –split-by.

Try –help for usage instructions.

异常信息的意思是,我们没有指定mapper按什么规则来分割数据。即我这个mapper应该读取哪些数据,一个mapper的时候没有问题是因为它一个mapper就读取了所有数据,现在mapper的数量是2了,那么我第一个mapper读取多少数据,第二个mapper就读取第一个mapper剩下的数据,现在两个mapper缺少一个分割数据的条件,找一个唯一标识的一列作为分割条件,这样两个mapper便可以迅速知道表中一共有多少条数据,两者分别需要读取多少数据。

知道了异常的原因,我们便加上分割数据的条件,我们使用的是Student表的ID字段。命令如下。

xiaoye@ubuntu3:~$ ./sqoop/bin/sqoop import -connect jdbc:mysql://192.168.72.133:3306/mysql –username root –password 12qw –query ‘select * from product where product_id>2 and $CONDITIONS’ –target-dir /user/xiaoye/product9 -m 2 –fields-terminated-by ‘\t’ –split-by product.product_id

这样就好了。这里我们再详细说说$CONDITIONS’的作用,sqoop首先根据Student.ID将数据统计出来,然后传给$CONDITIONS’,query语句就知道一共有多条数据了,假如第一个mapper读取了2条数据,那么也会把这个2传给$CONDITIONS,这样第二个mapper在读取数据的时候便可以根据第一个mapper读取的数量读取剩下的内容。

5,hdfs数据传输到数据库中

命令如下:

xiaoye@ubuntu3:~$ ./sqoop/bin/sqoop export –connect jdbc:mysql://192.168.72.133:3306/mysql –username root –password 12qw –export-dir /user/xiaoye/product –table product2

Warning: /home/xiaoye/sqoop/../hbase does not exist! HBase imports will fail.

Please set $HBASE_HOME to the root of your HBase installation.

Warning: /home/xiaoye/sqoop/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /home/xiaoye/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

18/04/06 01:19:01 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6-cdh5.5.4

18/04/06 01:19:01 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

18/04/06 01:19:01 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

18/04/06 01:19:01 INFO tool.CodeGenTool: Beginning code generation

18/04/06 01:19:02 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `product2` AS t LIMIT 1

18/04/06 01:19:02 ERROR manager.SqlManager: Error executing statement: com.mysql.jdbc.exceptions.jdbc4.MySQLSyntaxErrorException: Table ‘mysql.product2’ doesn’t exist

com.mysql.jdbc.exceptions.jdbc4.MySQLSyntaxErrorException: Table ‘mysql.product2’ doesn’t exist

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

报错,显示表不存在,那我们就新建一个表结构一样的表

建好后再次执行:

结果如下,product2确实有数据了。

如果有朋友遇到以下错误:

Caused by: java.lang.RuntimeException: Can’t parse input data: ‘6 baiyansong 45 88.0’

at student_copy.__loadFromFields(student_copy.java:335)

at student_copy.parse(student_copy.java:268)

at org.apache.sqoop.mapreduce.TextExportMapper.map(TextExportMapper.java:83)

… 10 more

Caused by: java.lang.NumberFormatException: For input string: “6 baiyansong 45 88.0”

at java.lang.NumberFormatException.forInputString(NumberFormatException.java:65)

at java.lang.Integer.parseInt(Integer.java:492)

at java.lang.Integer.valueOf(Integer.java:582)

at student_copy.__loadFromFields(student_copy.java:317)

… 12 more

因为:可以看到是数据转换出现了异常。那么是什么原因导致的呢,其实是列与列的分隔符导致的,td5下的两个文件中的数据是以”\t”来分隔的,而sqoop默认是以”,”来分隔的,因此出现了问题。知道了问题,我们对症下药,人为指定分隔符–fields-terminated-by ‘\t’,命令如下所示。这里说明一点的是,–export-dir /sqoop/td5这个参数有些人可能会有疑惑,因为td5文件夹下除了part-m-00000和part-m-00001两个结果文件外,还有一个名为“_SUCCESS”的文件,导出的时候会不会连这个文件的内容都导出去了呢?其实不会的,我们指定到文件夹,程序会去扫描这个文件夹下的所有文件,凡是不以”_”开头的文件都会被导出去,_SUCCESS文件是以“_”开头的,因此它不会被导出去,大家放心。

发布者:全栈程序员-用户IM,转载请注明出处:https://javaforall.cn/106166.html原文链接:https://javaforall.cn

【正版授权,激活自己账号】: Jetbrains全家桶Ide使用,1年售后保障,每天仅需1毛

【官方授权 正版激活】: 官方授权 正版激活 支持Jetbrains家族下所有IDE 使用个人JB账号...